Etica Funds, in collaboration with the international coalition Stop Killer Robots, issues an urgent appeal to the investment community, calling on it to promote international regulation of autonomous weapon systems: technologies capable of identifying and striking targets without human control.

The rapid evolution of artificial intelligence in the military sphere is opening up scenarios that raise profound, cross-cutting questions. For Etica Funds, which is committed to promoting a responsible and sustainable economy, understanding the nature and serious implications of autonomous weapons means reflecting on the values we want to defend, on the criteria that will underpin innovation and security, and on the future we are helping to build.

Reflection on these issues concerns society as a whole, and it is important to foster public debate and raise awareness so that artificial intelligence remains a tool at the service of humanity, not a threat to it.

Moreover, in the financial sector, international regulation plays a crucial role in reducing uncertainty and mitigating risk. This is a fundamental prerequisite for long-term-oriented investors, particularly when their capital allocation decisions are guided by environmental and social sustainability principles.

What Are Autonomous Weapon Systems?

Lethal autonomous weapon systems (LAWS) are weapons with operational autonomy: they can function without direct human intervention. This autonomy is made possible by the combined use of sensors, visual recognition systems, algorithms, and advanced artificial intelligence (AI).

In the absence of a precise legal definition, autonomous weapons are often classified according to the level of human control in the decision-making cycle:

- Human-in-the-loop: every decision requires approval from a human operator.

- Human-on-the-loop: the machine operates autonomously but under limited human supervision, with only narrow windows for intervention.

- Human-out-of-the-loop: the system acts in complete autonomy, with no direct human control at the moment of engagement. This is the configuration most closely associated with so-called “killer robots.”

The use of these weapons is no longer confined to experimentation but is already a reality in operational missions. Yet, at the global level, there is still no shared legal definition of an autonomous weapon system.

Why Reflect on This Issue?

To fully grasp the meaning and scope of using autonomous weapons, one must analyze their main implications from legal, technical, ethical, and strategic perspectives:

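- Legal issues: they violate international humanitarian law, especially the core principles of distinction (differentiating civilians from combatants) and proportionality (weighing the expected harm to civilians against the anticipated military advantage of an attack), and they create accountability gaps, since it becomes unclear who is responsible for an unlawful strike.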

- Technical issues: even the most advanced systems are prone to errors (which may increase in complex scenarios) and are exposed to cybersecurity vulnerabilities and operational unpredictability.

- Ethical issues: delegating decisions on the use of force to machines reduces people to objects, data points, or sets of coordinates—violating human dignity.

- Strategic implications: the technology risks becoming a driver of conflict escalation and arms races.

The Role of Autonomous Weapons in Today’s Geopolitical Context

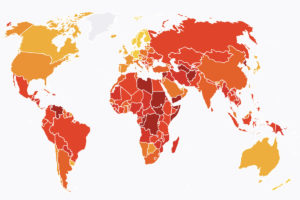

All this is happening in a global environment marked by a new phase of technological rearmament. The SIPRI Yearbook 2025 reports the start of a multipolar escalation involving the United States, Russia, China, and regional powers such as India, Pakistan, and Israel. International tensions—from the conflicts in Ukraine and Gaza to the war between Iran and Israel—are accelerating the deployment of emerging military technologies: drones, robots, lasers, and AI-integrated systems.

According to estimates, the global advanced military technology market is worth USD 22.17 billion in 2025 and is forecast to reach USD 38.10 billion by 2034 (Precedence Research, 2024).

In the United States, the Department of Defense portfolio includes 800 projects related to military AI (Centre for International Governance Innovation, 2024), while experts from the Future of Life Institute have tracked around 200 autonomous weapon systems already deployed in real-world scenarios in Ukraine, the Middle East, and Africa (Reuters, 2025).

Public Opinion and Regulatory Outlook

The debate on autonomous weapon systems increasingly involves civil society. According to an Ipsos survey (2021) conducted in 28 countries, 61% of respondents opposed their use. In Italy, opposition is even stronger: 74% of respondents reject their deployment, citing concrete risks, including technical and operational ones (Archivio Disarmo, 2025).

This growing public concern has strengthened the institutional debate: in 2024, the UN adopted Resolution 79/L.77, establishing a multilateral consultation forum under the aegis of the UN General Assembly, paving the way for possible negotiations on a legally binding treaty by 2026.

This is a significant step, acknowledging the urgency of regulating the military use of artificial intelligence and bridging the gap between rapid technological advances and the slower pace of legal evolution.

The Stop Killer Robots Campaign and the Investor Statement

Momentum for a new international norm is also driven by civil society. The Stop Killer Robots Campaign—a global coalition active in over 70 countries with more than 270 organizations—has played a decisive role in bringing the issue of autonomous weapon systems onto the international diplomatic agenda.

The Campaign advocates for the adoption of an international treaty that bans autonomous weapon systems targeting people and requires meaningful human control over the use of force.

In this context, Etica Funds and Stop Killer Robots appeal to institutional investors, inviting them to sign the Investor Statement: a declaration urging governments worldwide to begin negotiations on a new legally binding norm on autonomous weapon systems, with two key objectives:

- to ban the development and use of autonomous weapons that target humans;

- to ensure meaningful human control over all decisions related to the use of force.

As of the end of 2025, 29 institutions with combined assets exceeding USD 205 billion have signed the Statement, confirming growing attention to this highly relevant issue.

Marco Carlizzi, Chairman of Etica Funds

“Allowing machines to decide over life or death is unacceptable: it means crossing an unbridgeable boundary for humanity. Every autonomous weapon represents a renunciation of our responsibility and our dignity as human beings. These systems are not just a new generation of armaments: they mark a paradigm shift in the relationship between war, technology, and humanity, with challenges that go beyond the legal dimension. In a time marked by conflicts that claim civilian lives every day and massacres that weigh on the collective conscience, the use of autonomous weapon systems further amplifies the dehumanization of war and distances us from any prospect of peace. For this reason, Etica Funds, together with the Stop Killer Robots coalition, urges the investment community to press for the urgent adoption of binding international rules to ban killer robots.”

Etica Funds: 25 Years of Finance Without Weapons

The initiative with Stop Killer Robots is part of the program marking Etica Funds' first 25 years. Throughout this quarter-century, Etica's funds have never invested in companies involved in the production, use, maintenance, distribution, or storage of conventional weapons, let alone in controversial weapons, such as landmines, cluster bombs, and nuclear weapons, or their key components. This is a strong stance, consistent with Etica's commitment to countering the negative impacts of the arms industry on people, communities, and economic balance.

At the same time, Etica Funds is actively engaged in advocacy against nuclear weapons, with the goal of halting the financing of the production and maintenance of nuclear arsenals.
